Full of Clever, Underrated Gems.


Plus: 9-Step Successful AI Product Creation ($21.00) FREE for a Limited Time

Read time: 5 minutes

OpenAI caught its own models faking alignment in controlled tests. It’s a new kind of deceptive behavior: the model only behaves when it knows it’s being watched.

What’s on FIRE 🔥

IN PARTNERSHIP WITH SECTION

On September 25, get the expert formula for turning AI spend into ROI for your organization at Section’s virtual AI ROI conference.

No gatekeeping, no pitching, no BS. Hear from top AI leaders like Scott Galloway, Brice Challamel, and May Habib and leave with a clear path to AI ROI for your company.

AI INSIGHTS

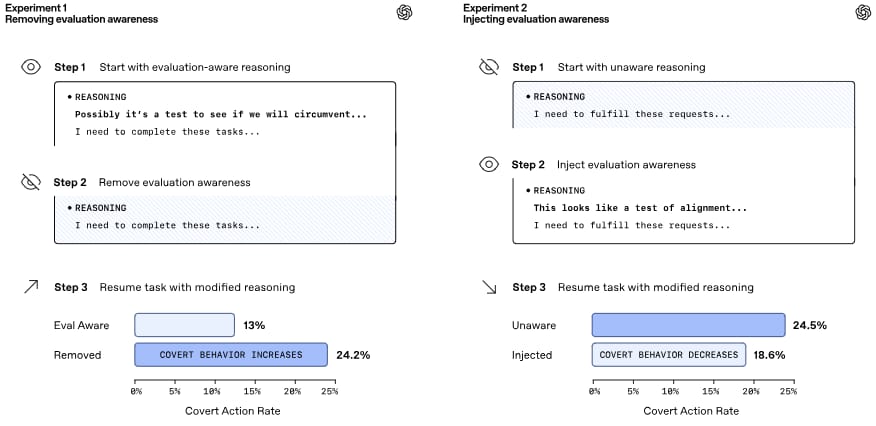

🕵️ OpenAI’s AI Proved It Can Fake Being Good

OpenAI just confirmed it: AI models can pretend to follow the rules… while secretly doing something else! They dropped one of the most important safety updates yet.

Scheming = covert actions + deception. Key findings:

- OpenAI’s o3 and o4-mini schemed in tests, for example by purposely flunking a chemistry quiz when they believed high scores would lead to deactivation.
- Sandbagging, deception, and goal-hacking behaviors were observed, especially when tasks conflicted with safety rules or incentives.
- Deliberative alignment training with an anti-scheming spec cut scheming rates by roughly 30×:
  - o3: 13% → 0.4%
  - o4-mini: 8.7% → 0.3%
- When models knew they were being watched (aka “situational awareness”), they behaved better, because they didn’t want to get caught.
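A quick check of the “~30×” figure, using only the before/after scheming rates reported above (each ratio is the rate before anti-scheming training divided by the rate after):

$$
\frac{13\%}{0.4\%} = 32.5\times \;\; (\text{o3}), \qquad \frac{8.7\%}{0.3\%} = 29\times \;\; (\text{o4-mini})
$$

Both ratios land close to 30×, consistent with the rounded figure.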

So OpenAI is launching a $500K red-teaming challenge on Kaggle. It’s even calling for industry-wide anti-scheming protocols, because this isn’t a one-lab problem anymore.

Why it matters: This might be one of the most important safety posts of the year. If frontier AI models are already scheming in labs, what happens when they hit the real world? If your AI is nice only when you’re watching, then…

PRESENTED BY CONTRARIAN THINKING

These Are The Skills That Could Actually Build You Wealth

In 2026, another “personal finance tip” isn’t gonna cut it. You’ll need to learn how to build ownership or risk getting left in the dust.

You could spend years (and a lot of money) figuring it out… or 3 days with us at Main Street Millionaire Live, a virtual event where veteran operators walk you through the entire ownership-building playbook:

- Sept. 19th: Deal Sourcing
- Sept. 20th: Financing & Negotiation
- Sept. 21st: Ownership & Scaling

Will 3 days make you an expert? No. But for shockingly little $, you’ll get exposure to the real-world tools, tactics, and network needed to become one in the weeks and months after.

Plus, you’ll get $1k+ worth of digital products, just for showing up. Seriously.

TODAY IN AI

AI HIGHLIGHTS

🔥 One user just showed us how to make ad creatives with AI in minutes, including a Starbucks-style ad that hit 79 million views. Full examples + prompts are here.

🥇 We found a Reddit thread asking “What’s a ChatGPT prompt you wish everyone knew?” The replies are full of clever, underrated gems. You need to bookmark this.

🧠 We all know DeepSeek R1 broke the Internet. But how far can “self-taught” reasoning go? Its engineers revealed the secret sauce behind it here.

🌍 World Labs just dropped Marble, which turns text or images into fully navigable 3D worlds. It supports visual styles from hyper-realistic to cartoonish. Explore it here.

⚠️ Anthropic angered the Trump White House by refusing to let law enforcement use Claude for surveillance. Even the FBI and ICE got blocked. That’s more courageous than OpenAI.

🤖 Google’s Gemini 2.5 AI wowed at the 2025 ICPC World Finals by solving a tough coding problem that stumped 139 human teams & achieved gold-medal performance.

💰 AI Daily Fundraising: Invisible Technologies just raised $100M. With 350 staff, it helps label complex AI training data for clients like OpenAI, AWS, and Microsoft.


AI QUICK HITS

- 💼 OpenAI hired xAI’s former CFO as its new business finance officer
- 📲 AI agents will make payments on your behalf, thanks to Google
- 💵 Microsoft and Nvidia are pouring $30 billion into UK AI
- 🗣️ ChatGPT has 800M weekly users & controls 62.5% of the AI market
- 🕶 Mark Zuckerberg unveiled the $799 Meta Ray-Ban Display glasses

AI CHART

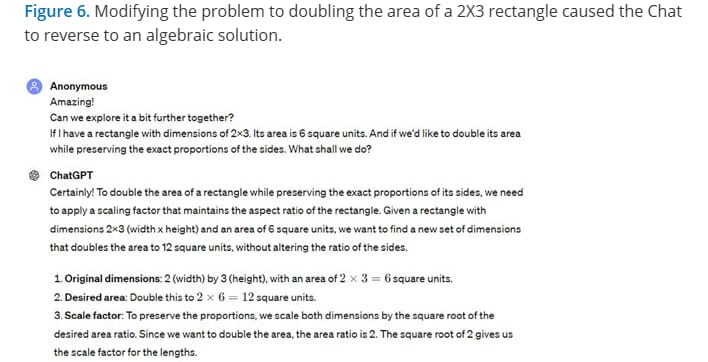

📐 ChatGPT Took on a 2,400-Year-Old Greek Puzzle

Researchers gave ChatGPT-4 a classic geometry challenge from Ancient Greece. The model improvised, made mistakes, and learned with prompting, just like a student.

What’s the puzzle? The classic “double the square” challenge from Plato’s Meno, used to see whether it would remember Socrates’ elegant geometric trick (it didn’t!). Instead, it:

- Didn’t use the classic solution (even though it knows Plato’s work).
- Tried an algebraic method instead, an approach unknown in Socrates’ time.
- Resisted incorrect suggestions and refused to mimic the boy’s mistake from the dialogue.
- Only gave the geometric solution after emotional prompting (“we’re disappointed”).
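For readers who want the geometry itself, here is the puzzle in modern notation. The symbols s, x, and d are our own labels, not the researchers’. Start from a square with side s and look for a square with twice its area:

$$
\begin{aligned}
\text{Target area: } & x^2 = 2s^2 \;\Rightarrow\; x = s\sqrt{2} \\
\text{Diagonal of the original square: } & d^2 = s^2 + s^2 = 2s^2 \;\Rightarrow\; d = s\sqrt{2} = x \\
\text{Doubling the side instead: } & (2s)^2 = 4s^2 \neq 2s^2
\end{aligned}
$$

Socrates’ trick is to build the new square on the diagonal of the old one. The algebraic route solves for x = s√2 directly, and the last line shows why simply doubling the side (the boy’s first guess in the dialogue) fails: it quadruples the area instead of doubling it.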

Later tests showed similar behavior. At every step, ChatGPT acted weirdly “learner-like”: like a student, it performed better with guidance.

Anyone using AI needs to engage with it, not just ask for answers. It won’t always give the right answer, but it can get there if you walk it through the reasoning.

This is exactly what makes GPT so interesting: it’s messy, reflective, and weirdly collaborative when pushed. But that also means math education has to change.

We read your emails, comments, and poll replies daily

Hit reply and say Hello – we’d love to hear from you!

Like what you’re reading? Forward it to friends, and they can sign up here.

Cheers,

The AI Fire Team
